Computers / Laptops / Internet

A community board for people who love IT and computer hardware

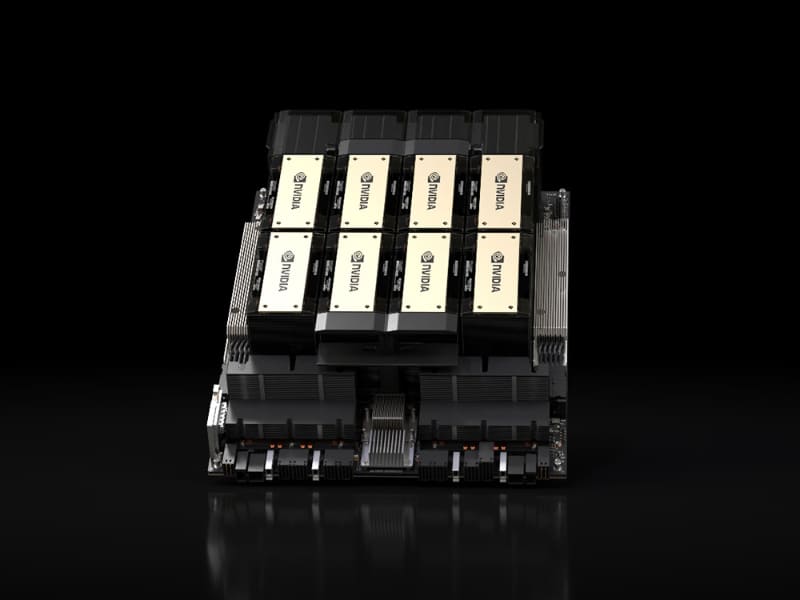

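NVIDIA has announced the H200, a Hopper-architecture GPU equipped with HBM3e memory, along with the HGX H200.

Memory bandwidth is 4.8TB/s and capacity is 141GB, which is 1.4x the bandwidth and nearly double the capacity of the H100. As a result, performance improves by 1.9x on Llama2 70B and 1.6x on GPT-3 175B.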

SC23—NVIDIA today announced it has supercharged the world’s leading AI computing platform with the introduction of the NVIDIA HGX™ H200. Based on NVIDIA Hopper™ architecture, the platform features the NVIDIA H200 Tensor Core GPU with advanced memory to handle massive amounts of data for generative AI and high performance computing workloads.

The NVIDIA H200 is the first GPU to offer HBM3e — faster, larger memory to fuel the acceleration of generative AI and large language models, while advancing scientific computing for HPC workloads. With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100.
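The "nearly double" and "2.4x" figures can be checked with simple division. The sketch below is only a rough sanity check: the H200 numbers come from the announcement, but the A100 baseline (80GB capacity, about 2.039TB/s bandwidth for the 80GB SXM model) is not stated in the text and is an assumption on my part, as are the variable and function names.

```python
# H200 figures quoted in the announcement
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBS = 4.8

# ASSUMPTION: A100 80GB SXM baseline, not stated in the announcement
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBS = 2.039


def ratio(new_value, old_value):
    """Return how many times larger new_value is than old_value."""
    return new_value / old_value


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBS, A100_BANDWIDTH_TBS)

print(f"capacity:  {capacity_ratio:.2f}x")   # under 2x, i.e. "nearly double"
print(f"bandwidth: {bandwidth_ratio:.2f}x")  # rounds to roughly 2.4x
```

If a different A100 variant is used as the baseline (for example the 40GB model), the ratios change, so treat the result as illustrative only.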

H200-powered systems from the world’s leading server manufacturers and cloud service providers are expected to begin shipping in the second quarter of 2024.

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA. “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

Perpetual Innovation, Perpetual Performance Leaps

The NVIDIA Hopper architecture delivers an unprecedented performance leap over its predecessor and continues to raise the bar through ongoing software enhancements with H100, including the recent release of powerful open-source libraries like NVIDIA TensorRT™-LLM.

The introduction of H200 will lead to further performance leaps, including nearly doubling inference speed on Llama 2, a 70 billion-parameter LLM, compared to the H100. Additional performance leadership and improvements with H200 are expected with future software updates.

NVIDIA H200 Form Factors

NVIDIA H200 will be available in NVIDIA HGX H200 server boards with four- and eight-way configurations, which are compatible with both the hardware and software of HGX H100 systems. It is also available in the NVIDIA GH200 Grace Hopper™ Superchip with HBM3e, announced in August.

With these options, H200 can be deployed in every type of data center, including on premises, cloud, hybrid-cloud and edge. NVIDIA’s global ecosystem of partner server makers — including ASRock Rack, ASUS, Dell Technologies, Eviden, GIGABYTE, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron and Wiwynn — can update their existing systems with an H200.

Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances starting next year, in addition to CoreWeave, Lambda and Vultr.

Powered by NVIDIA NVLink™ and NVSwitch™ high-speed interconnects, HGX H200 provides the highest performance on various application workloads, including LLM training and inference for the largest models beyond 175 billion parameters.

An eight-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for the highest performance in generative AI and HPC applications.
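The 1.1TB aggregate figure follows from the per-GPU capacity quoted earlier in the announcement. A minimal sketch of that arithmetic, using only numbers from the text; the function names and the decimal conversion (1TB = 1000GB) are my own assumptions, and "over 32 petaflops" is treated as a lower bound:

```python
# Figures quoted in the announcement
GPUS_PER_BOARD = 8        # eight-way HGX H200
MEMORY_PER_GPU_GB = 141   # HBM3e capacity per H200
BOARD_FP8_PFLOPS = 32     # "over 32 petaflops", so a lower bound


def aggregate_memory_tb(gpus, per_gpu_gb):
    """Total board memory in TB, assuming 1 TB = 1000 GB."""
    return gpus * per_gpu_gb / 1000


def per_gpu_fp8_pflops(board_pflops, gpus):
    """Per-GPU share of the board's FP8 compute, split evenly."""
    return board_pflops / gpus


total_tb = aggregate_memory_tb(GPUS_PER_BOARD, MEMORY_PER_GPU_GB)
per_gpu = per_gpu_fp8_pflops(BOARD_FP8_PFLOPS, GPUS_PER_BOARD)

print(f"aggregate memory: {total_tb:.3f} TB")    # 8 x 141GB = 1128GB
print(f"FP8 per GPU: at least {per_gpu:.1f} PFLOPS")
```

So eight GPUs at 141GB each give 1128GB, which the press release rounds to 1.1TB.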

When paired with NVIDIA Grace™ CPUs with an ultra-fast NVLink-C2C interconnect, the H200 creates the GH200 Grace Hopper Superchip with HBM3e — an integrated module designed to serve giant-scale HPC and AI applications.

Accelerate AI With NVIDIA Full-Stack Software

NVIDIA’s accelerated computing platform is supported by powerful software tools that enable developers and enterprises to build and accelerate production-ready applications from AI to HPC. This includes the NVIDIA AI Enterprise suite of software for workloads such as speech, recommender systems and hyperscale inference.

Availability

The NVIDIA H200 will be available from global system manufacturers and cloud service providers starting in the second quarter of 2024.

Watch Buck’s SC23 special address on Nov. 13 at 6 a.m. PT to learn more about the NVIDIA H200 Tensor Core GPU.

https://nvidianews.nvidia.com/news/nvidia-supercharges-hopper-the-worlds-leading-ai-computing-platform
