The November 2023 supercomputer performance rankings have been announced.

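Frontier takes first place. Pairing 3rd Gen AMD EPYC 64-core processors with Instinct MI250X accelerators, it delivers an HPL score of 1.194 Exaflop/s (its theoretical peak is about 1.679 Exaflop/s).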

HIGHLIGHTS - NOVEMBER 2023

This is the 62nd edition of the TOP500.

The 62nd edition of the TOP500 shows five new or upgraded entries in the top 10 but the Frontier system still remains the only true exascale machine with an HPL score of 1.194 Exaflop/s.

The Frontier system at the Oak Ridge National Laboratory, Tennessee, USA remains the No. 1 system on the TOP500 and is still the only system reported with an HPL performance exceeding one Exaflop/s. Frontier brought the top position back to the USA on the June 2022 list and has since been remeasured with an HPL score of 1.194 Exaflop/s.

Frontier is based on the latest HPE Cray EX235a architecture and is equipped with AMD EPYC 64C 2GHz processors. The system has 8,699,904 total cores, a power efficiency rating of 52.59 gigaflops/watt, and relies on HPE’s Slingshot 11 network for data transfer.
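As a rough cross-check, the HPL score and the efficiency rating quoted above imply a power draw during the benchmark run. The sketch below simply divides one by the other; the function name is made up for illustration, and it assumes the efficiency figure was measured on the same run as the 1.194 Exaflop/s score.

```python
def implied_power_megawatts(rmax_flops: float, gflops_per_watt: float) -> float:
    """Power in megawatts implied by an HPL score and an efficiency rating."""
    watts = rmax_flops / (gflops_per_watt * 1e9)  # flop/s divided by flop/s per watt
    return watts / 1e6


# Frontier: 1.194 Exaflop/s at 52.59 gigaflops/watt (figures from the text above).
frontier_mw = implied_power_megawatts(1.194e18, 52.59)
print(f"Frontier: roughly {frontier_mw:.1f} MW during the HPL run")
```

The result lands a little under 23 MW, which gives a sense of scale for an exascale machine.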

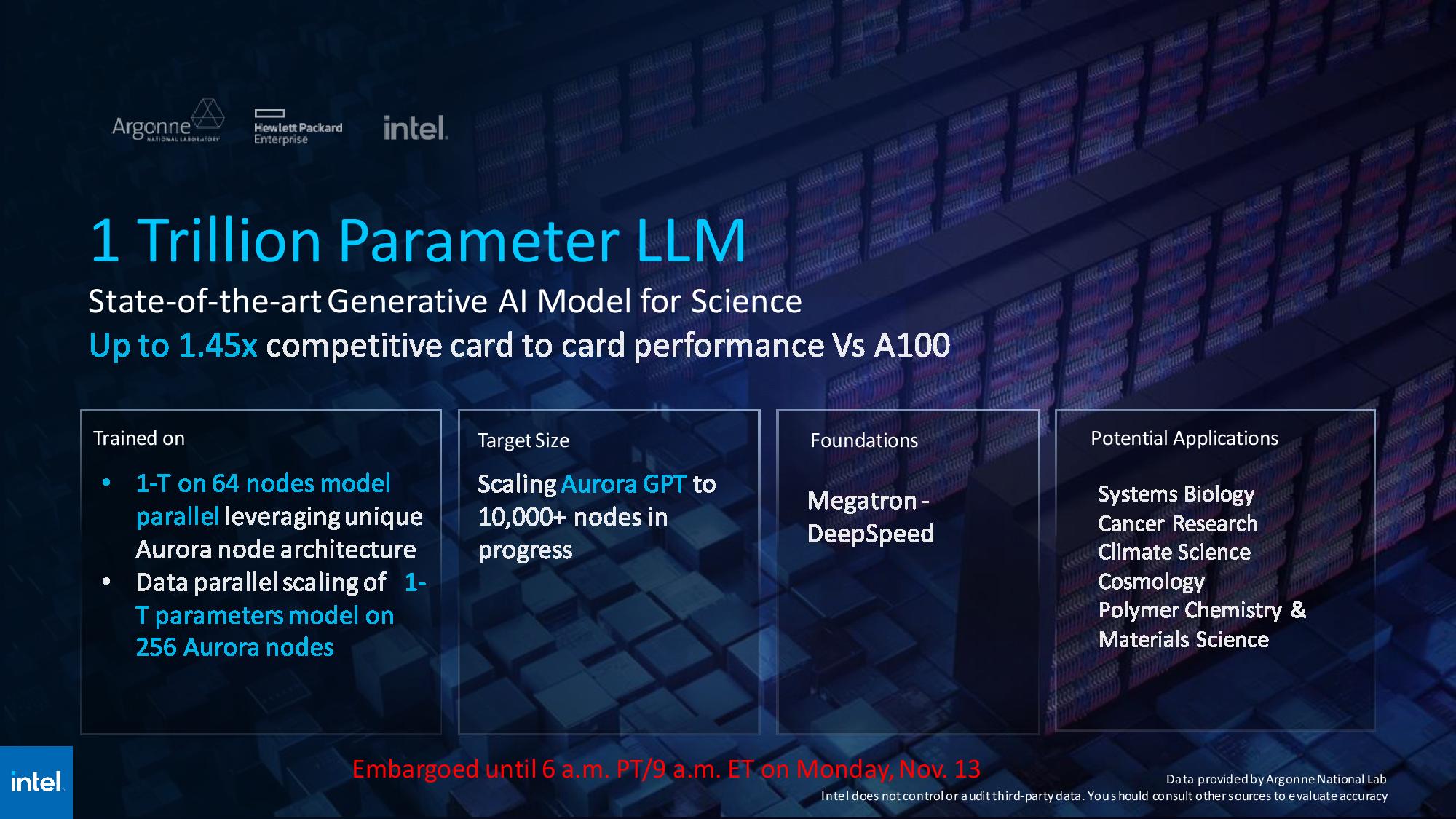

The Aurora system at the Argonne Leadership Computing Facility, Illinois, USA is currently being commissioned and, at full scale, will exceed Frontier with a peak performance of 2 Exaflop/s. It was submitted with a measurement on half of the final system, achieving 585 Petaflop/s on the HPL benchmark, which secured the No. 2 spot on the TOP500.

Aurora is built by Intel on the HPE Cray EX - Intel Exascale Compute Blade. It uses Intel Xeon CPU Max Series processors and Intel Data Center GPU Max Series accelerators, which communicate through HPE’s Slingshot-11 network interconnect.

The Eagle system installed in the Microsoft Azure cloud in the USA is newly listed as No. 3. This Microsoft NDv5 system is based on Intel Xeon Platinum 8480C processors and NVIDIA H100 accelerators and achieved an HPL score of 561 Pflop/s.

The Fugaku system at the RIKEN Center for Computational Science (R-CCS) in Kobe, Japan is at No. 4, the first system on the list outside the USA. It held the No. 1 position on the TOP500 from June 2020 until November 2021. Its HPL benchmark score of 442 Pflop/s is now only sufficient for the No. 4 spot.

The LUMI system at EuroHPC/CSC in Finland has been further upgraded and is now listed as No. 5 worldwide. It remains the largest system in Europe and is now listed with an HPL score of 380 Pflop/s.

Here is a summary of the systems in the Top 10:

- Frontier remains the No. 1 system in the TOP500. This HPE Cray EX system is the first US system with a performance exceeding one Exaflop/s. It is installed at the Oak Ridge National Laboratory (ORNL) in Tennessee, USA, where it is operated for the Department of Energy (DOE). It has achieved 1.194 Exaflop/s using 8,699,904 cores. The HPE Cray EX architecture combines 3rd Gen AMD EPYC™ CPUs optimized for HPC and AI with AMD Instinct™ MI250X accelerators and a Slingshot-11 interconnect.

- Aurora achieved the No. 2 spot by submitting an HPL score of 585 Pflop/s measured on half of the full system. It is installed at the Argonne Leadership Computing Facility, Illinois, USA, where it is also operated for the Department of Energy (DOE). This new Intel system is based on HPE Cray EX - Intel Exascale Compute Blades. It uses Intel Xeon CPU Max Series processors, Intel Data Center GPU Max Series accelerators, and a Slingshot-11 interconnect.

- Eagle, the new No. 3 system, is installed by Microsoft in its Azure cloud. This Microsoft NDv5 system is based on Xeon Platinum 8480C processors and NVIDIA H100 accelerators and achieved an HPL score of 561 Pflop/s.

- Fugaku, the No. 4 system, is installed at the RIKEN Center for Computational Science (R-CCS) in Kobe, Japan. It has 7,630,848 cores, which allowed it to achieve an HPL benchmark score of 442 Pflop/s.

- The (again) upgraded LUMI system, another HPE Cray EX system, installed at the EuroHPC center at CSC in Finland, is now No. 5 with a performance of 380 Pflop/s. The European High-Performance Computing Joint Undertaking (EuroHPC JU) is pooling European resources to develop top-of-the-range Exascale supercomputers for processing big data. One of the pan-European pre-Exascale supercomputers, LUMI, is located in CSC’s data center in Kajaani, Finland.

- The No. 6 system, Leonardo, is installed at a different EuroHPC site, CINECA in Italy. It is an Atos BullSequana XH2000 system with Xeon Platinum 8358 32C 2.6GHz as main processors, NVIDIA A100 SXM4 40 GB as accelerators, and quad-rail NVIDIA HDR100 Infiniband as interconnect. It achieved a Linpack performance of 238.7 Pflop/s.

- Summit, an IBM-built system at the Oak Ridge National Laboratory (ORNL) in Tennessee, USA, is now listed at the No. 7 spot worldwide with a performance of 148.8 Pflop/s on the HPL benchmark, which is used to rank the TOP500 list. Summit has 4,356 nodes, each housing two POWER9 CPUs with 22 cores each and six NVIDIA Tesla V100 GPUs, each with 80 streaming multiprocessors (SM). The nodes are linked together with a Mellanox dual-rail EDR InfiniBand network.

- The MareNostrum 5 ACC system is new at No. 8 and installed at the EuroHPC/Barcelona Supercomputing Center in Spain. This BullSequana XH3000 system uses Xeon Platinum 8460Y processors with NVIDIA H100 and Infiniband NDR200. It achieved 138.2 Pflop/s HPL performance.

- The new Eos system, listed at No. 9, is an NVIDIA DGX SuperPOD based system at NVIDIA, USA. It is based on the NVIDIA DGX H100 with Xeon Platinum 8480C processors, NVIDIA H100 accelerators, and Infiniband NDR400, and it achieves 121.4 Pflop/s.

- Sierra, a system at the Lawrence Livermore National Laboratory, CA, USA, is at No. 10. Its architecture is very similar to that of the No. 7 system, Summit. It is built with 4,320 nodes, each with two POWER9 CPUs and four NVIDIA Tesla V100 GPUs. Sierra achieved 94.6 Pflop/s.

Highlights from the List

- A total of 186 systems on the list are using accelerator/co-processor technology, up from 184 six months ago. 78 of these use NVIDIA Ampere chips, 65 use NVIDIA Volta, and 11 systems with 17.

| Rank | Accelerator/Co-Processor | Count | System Share (%) | Rmax (TFlops) | Rpeak (TFlops) | Cores |
|---|---|---|---|---|---|---|
| 1 | NVIDIA Tesla V100 | 50 | 10 | 193,697 | 366,711 | 3,807,768 |
| 2 | NVIDIA A100 | 27 | 5.4 | 329,415 | 494,544 | 3,340,224 |
| 3 | NVIDIA A100 SXM4 40 GB | 17 | 3.4 | 183,039 | 256,548 | 1,863,032 |
| 4 | NVIDIA Tesla A100 80G | 11 | 2.2 | 125,864 | 167,923 | 1,088,032 |
| 5 | NVIDIA A100 SXM4 80 GB | 11 | 2.2 | 64,250 | 75,189 | 582,720 |
| 6 | AMD Instinct MI250X | 10 | 2 | 1,741,739 | 2,445,340 | 12,660,800 |
| 7 | NVIDIA Tesla V100 SXM2 | 10 | 2 | 88,521 | 177,436 | 1,997,584 |
| 8 | NVIDIA Tesla A100 40G | 9 | 1.8 | 60,912 | 98,037 | 642,884 |
| 9 | NVIDIA Tesla P100 | 5 | 1 | 42,903 | 60,653 | 839,200 |
| 10 | NVIDIA H100 | 5 | 1 | 590,169 | 891,871 | 1,194,024 |
| 11 | NVIDIA Volta GV100 | 4 | 0.8 | 269,439 | 362,565 | 4,408,096 |
| 12 | NVIDIA H100 SXM5 80 GB | 4 | 0.8 | 176,375 | 290,081 | 743,888 |
| 13 | Intel Data Center GPU Max 1550 | 2 | 0.4 | 23,335 | 59,843 | 199,872 |
| 14 | Intel Data Center GPU Max | 2 | 0.4 | 602,531 | 1,087,283 | 4,892,568 |
| 15 | NVIDIA A100 SXM4 64 GB | 2 | 0.4 | 242,205 | 309,448 | 1,849,824 |
| 16 | NVIDIA Tesla K40 | 2 | 0.4 | 7,154 | 12,264 | 145,600 |
| 17 | NVIDIA Tesla P100 NVLink | 1 | 0.2 | 8,125 | 12,127 | 135,828 |
| 18 | NVIDIA H100 64GB | 1 | 0.2 | 138,200 | 265,574 | 680,960 |
| 19 | Preferred Networks MN-Core | 1 | 0.2 | 2,180 | 3,348 | 1,664 |
| 20 | Nvidia Volta V100 | 1 | 0.2 | 21,640 | 29,354 | 347,776 |
| 21 | NVIDIA HGX A100 80GB 500W | 1 | 0.2 | 2,146 | 3,508 | 21,168 |
| 22 | NVIDIA Tesla K80 | 1 | 0.2 | 2,592 | 3,799 | 66,000 |
| 23 | Intel Xeon Phi 31S1P | 1 | 0.2 | 2,071 | 3,075 | 174,720 |
| 24 | Deep Computing Processor | 1 | 0.2 | 4,325 | 6,134 | 163,840 |
| 25 | Intel Xeon Phi 5110P | 1 | 0.2 | 2,539 | 3,388 | 194,616 |
| 26 | NVIDIA Tesla K20x | 1 | 0.2 | 3,188 | 4,605 | 72,000 |
| 27 | Matrix-2000 | 1 | 0.2 | 61,445 | 100,679 | 4,981,760 |
| 28 | AMD Instinct MI210 64 GB | 1 | 0.2 | 9,087 | 19,344 | 96,768 |
| 29 | NVIDIA 2050 | 1 | 0.2 | 2,566 | 4,701 | 186,368 |
| 30 | NVIDIA Tesla K40m | 1 | 0.2 | 2,478 | 4,947 | 64,384 |

- Intel continues to provide the processors for the largest share (67.60 %) of TOP500 systems, down from 72.00 % six months ago. 140 (28.00 %) of the systems in the current list use AMD processors, up from 24.20 % six months ago.

- The entry level to the list moved up to the 2.02 Pflop/s mark on the Linpack benchmark.

- The last system on the newest list was listed at position 455 in the previous TOP500.

- The total combined performance of all 500 systems is now 7.03 Exaflop/s (Eflop/s), up from 5.24 Eflop/s six months ago.

- The entry point for the TOP100 increased to 7.89 Pflop/s.

- The average concurrency level in the TOP500 is 212,627 cores per system, up from 190,919 six months ago.
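The percentages in these highlights are plain shares of the 500 listed systems, so they are easy to sanity-check. The sketch below is only an illustration (the helper names are hypothetical); it converts between counts and shares and derives the total core count implied by the average concurrency level.

```python
TOTAL_SYSTEMS = 500  # the list always contains 500 entries


def share_percent(count: int) -> float:
    """Share of the list, in percent, for a given number of systems."""
    return 100.0 * count / TOTAL_SYSTEMS


def count_from_share(share: float) -> int:
    """Number of systems corresponding to a share given in percent."""
    return round(share * TOTAL_SYSTEMS / 100.0)


amd_share = share_percent(140)            # AMD: 140 systems
intel_systems = count_from_share(67.60)   # Intel: 67.60 % of the list
accelerated_share = share_percent(186)    # systems with accelerators
total_cores = 212_627 * TOTAL_SYSTEMS     # average cores per system times 500

print(f"AMD share: {amd_share:.2f} %")
print(f"Intel systems: {intel_systems}")
print(f"Accelerated systems: {accelerated_share:.1f} % of the list")
print(f"Implied total cores: {total_cores:,}")
```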

General Trends

Installations by countries/regions:

| Rank | Country/Region | Count | System Share (%) | Rmax (TFlops) | Rpeak (TFlops) | Cores |

|---|---|---|---|---|---|---|

| 1 | United States | 161 | 32.2 | 3,725,851 | 5,711,149 | 40,077,020 |

| 2 | China | 104 | 20.8 | 407,240 | 770,545 | 22,605,992 |

| 3 | Germany | 36 | 7.2 | 256,266 | 391,133 | 4,292,044 |

| 4 | Japan | 32 | 6.4 | 669,834 | 866,458 | 11,374,380 |

| 5 | France | 23 | 4.6 | 173,226 | 249,513 | 3,967,832 |

| 6 | United Kingdom | 15 | 3 | 81,707 | 142,086 | 2,048,112 |

| 7 | Italy | 12 | 2.4 | 351,758 | 463,800 | 4,427,376 |

| 8 | South Korea | 12 | 2.4 | 151,323 | 207,845 | 2,021,812 |

| 9 | Netherlands | 10 | 2 | 86,851 | 150,917 | 921,120 |

| 10 | Canada | 10 | 2 | 41,208 | 71,912 | 845,984 |

| 11 | Brazil | 9 | 1.8 | 55,709 | 106,041 | 868,096 |

| 12 | Saudi Arabia | 7 | 1.4 | 90,911 | 138,590 | 2,676,084 |

| 13 | Russia | 7 | 1.4 | 73,715 | 101,737 | 741,328 |

| 14 | Australia | 6 | 1.2 | 44,908 | 63,185 | 565,824 |

| 15 | Sweden | 6 | 1.2 | 47,601 | 68,808 | 408,256 |

| 16 | Norway | 5 | 1 | 15,955 | 24,964 | 425,920 |

| 17 | Taiwan | 5 | 1 | 31,595 | 43,027 | 559,728 |

| 18 | Poland | 4 | 0.8 | 13,813 | 20,495 | 224,064 |

| 19 | Ireland | 4 | 0.8 | 11,394 | 22,450 | 290,560 |

| 20 | India | 4 | 0.8 | 19,453 | 25,252 | 325,832 |

| 21 | Singapore | 3 | 0.6 | 9,038 | 15,786 | 234,112 |

| 22 | Switzerland | 3 | 0.6 | 26,667 | 36,407 | 584,992 |

| 23 | Spain | 3 | 0.6 | 184,773 | 322,242 | 1,559,936 |

| 24 | Finland | 3 | 0.6 | 391,388 | 546,193 | 3,116,992 |

| 25 | Czechia | 2 | 0.4 | 9,589 | 12,914 | 163,584 |

| 26 | Austria | 2 | 0.4 | 5,038 | 6,809 | 133,152 |

| 27 | Slovenia | 2 | 0.4 | 6,918 | 10,047 | 156,480 |

| 28 | Bulgaria | 2 | 0.4 | 7,045 | 9,154 | 164,224 |

| 29 | Luxembourg | 2 | 0.4 | 12,807 | 18,291 | 172,544 |

| 30 | Morocco | 1 | 0.2 | 3,158 | 5,015 | 71,232 |

| 31 | Argentina | 1 | 0.2 | 3,876 | 5,991 | 43,008 |

| 32 | United Arab Emirates | 1 | 0.2 | 7,257 | 9,492 | 107,568 |

| 33 | Belgium | 1 | 0.2 | 2,717 | 3,094 | 23,200 |

| 34 | Hungary | 1 | 0.2 | 3,105 | 4,508 | 27,776 |
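The number of systems and the share of performance tell quite different stories. As a rough illustration, the sketch below compares each country's share of systems with its share of aggregate Rmax for a few rows copied from the table. It assumes the list-wide total of 7.03 Eflop/s quoted in the highlights, which is a rounded figure, so the percentages are approximate.

```python
TOTAL_SYSTEMS = 500
TOTAL_RMAX_TFLOPS = 7.03e6  # 7.03 Eflop/s from the highlights, expressed in TFlops

# (country, system count, Rmax in TFlops), copied from the table above
rows = [
    ("United States", 161, 3_725_851),
    ("China", 104, 407_240),
    ("Japan", 32, 669_834),
    ("Finland", 3, 391_388),
]

shares = {}
for country, count, rmax in rows:
    system_share = 100.0 * count / TOTAL_SYSTEMS
    rmax_share = 100.0 * rmax / TOTAL_RMAX_TFLOPS
    shares[country] = (system_share, rmax_share)
    print(f"{country:14s} systems {system_share:5.1f} %   Rmax {rmax_share:5.1f} %")
```

On these figures the United States holds about a third of the systems but over half of the measured performance, while Finland's three systems (LUMI among them) account for more Rmax than a country's much larger number of smaller machines might.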

HPC manufacturer:

| Rank | Manufacturer | Count | System Share (%) | Rmax (TFlops) | Rpeak (TFlops) | Cores |

|---|---|---|---|---|---|---|

| 1 | Lenovo | 169 | 33.8 | 607,475 | 1,102,594 | 15,455,216 |

| 2 | HPE | 103 | 20.6 | 2,450,983 | 3,433,844 | 30,023,316 |

| 3 | EVIDEN | 48 | 9.6 | 687,601 | 1,044,501 | 9,740,576 |

| 4 | Inspur | 34 | 6.8 | 77,234 | 192,784 | 1,661,600 |

| 5 | DELL EMC | 32 | 6.4 | 184,767 | 314,595 | 3,588,956 |

| 6 | Nvidia | 18 | 3.6 | 334,354 | 464,638 | 2,368,288 |

| 7 | NEC | 12 | 2.4 | 70,990 | 99,067 | 725,072 |

| 8 | Fujitsu | 12 | 2.4 | 566,894 | 717,062 | 9,803,088 |

| 9 | Sugon | 9 | 1.8 | 21,988 | 43,119 | 637,056 |

| 10 | MEGWARE | 7 | 1.4 | 22,774 | 32,680 | 372,840 |

| 11 | Penguin Computing, Inc. | 6 | 1.2 | 19,409 | 29,686 | 569,368 |

| 12 | IBM | 6 | 1.2 | 201,915 | 273,679 | 3,292,832 |

| 13 | Microsoft Azure | 6 | 1.2 | 150,370 | 224,747 | 1,328,640 |

| 14 | NUDT | 3 | 0.6 | 66,082 | 108,454 | 5,342,848 |

| 15 | ACTION | 3 | 0.6 | 7,994 | 63,814 | 178,368 |

| 16 | Huawei Technologies Co., Ltd. | 2 | 0.4 | 5,872 | 9,390 | 101,184 |

| 17 | Intel | 2 | 0.4 | 590,953 | 1,069,119 | 4,870,328 |

| 18 | Liqid | 2 | 0.4 | 5,393 | 7,518 | 102,144 |

| 19 | IBM / NVIDIA / Mellanox | 2 | 0.4 | 112,840 | 148,759 | 1,860,768 |

| 20 | Quanta Computer / Taiwan Fixed Network / ASUS Cloud | 2 | 0.4 | 11,298 | 19,563 | 220,752 |

| 21 | YANDEX, NVIDIA | 2 | 0.4 | 37,550 | 50,051 | 328,352 |

| 22 | Cray Inc./Hitachi | 2 | 0.4 | 11,461 | 18,250 | 271,584 |

| 23 | NRCPC | 1 | 0.2 | 93,015 | 125,436 | 10,649,600 |

| 24 | NEC / DELL | 1 | 0.2 | 6,708 | 7,627 | 125,440 |

| 25 | ASUSTeK / ASUS Cloud / Taiwan Web Service Corporation | 1 | 0.2 | 3,533 | 4,000 | 62,496 |

| 26 | Preferred Networks | 1 | 0.2 | 2,180 | 3,348 | 1,664 |

| 27 | Supermicro | 1 | 0.2 | 3,700 | 6,024 | 85,568 |

| 28 | Amazon Web Services | 1 | 0.2 | 9,950 | 15,107 | 172,692 |

| 29 | MEGWARE / Supermicro | 1 | 0.2 | 9,087 | 19,344 | 96,768 |

| 30 | Nebius AI | 1 | 0.2 | 46,540 | 86,792 | 218,880 |

| 31 | Fujitsu / Lenovo | 1 | 0.2 | 9,264 | 15,142 | 204,032 |

| 32 | Netweb Technologies | 1 | 0.2 | 8,500 | 13,170 | 81,344 |

| 33 | NVIDIA, Inspur | 1 | 0.2 | 12,810 | 20,029 | 130,944 |

| 34 | ClusterVision / Hammer | 1 | 0.2 | 2,969 | 4,335 | 64,512 |

| 35 | Lenovo/IBM | 1 | 0.2 | 2,814 | 3,578 | 86,016 |

| 36 | Atipa Technology | 1 | 0.2 | 2,539 | 3,388 | 194,616 |

| 37 | Self-made | 1 | 0.2 | 3,307 | 4,897 | 60,512 |

| 38 | Format sp. z o.o. | 1 | 0.2 | 5,051 | 7,709 | 47,616 |

| 39 | Microsoft | 1 | 0.2 | 561,200 | 846,835 | 1,123,200 |

Interconnect Technologies:

| Rank | Interconnect | Count | System Share (%) | Rmax (TFlops) | Rpeak (TFlops) | Cores |

|---|---|---|---|---|---|---|

| 1 | Infiniband | 219 | 43.8 | 2,907,744 | 4,352,058 | 33,362,748 |

| 2 | Gigabit Ethernet | 209 | 41.8 | 3,092,269 | 4,917,199 | 38,035,068 |

| 3 | Omnipath | 33 | 6.6 | 166,863 | 248,630 | 3,748,420 |

| 4 | Custom Interconnect | 30 | 6 | 331,560 | 496,015 | 21,875,448 |

| 5 | Proprietary Network | 9 | 1.8 | 533,405 | 645,722 | 9,291,776 |
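One quantity these tables allow you to derive is HPL efficiency, i.e. the ratio of measured Rmax to theoretical Rpeak. The sketch below computes it per interconnect family from the aggregate figures above. Treat it as a rough illustration only: aggregates mix very different machines, so the ratio says little about any single interconnect.

```python
# (interconnect, Rmax in TFlops, Rpeak in TFlops), copied from the table above
interconnects = [
    ("Infiniband", 2_907_744, 4_352_058),
    ("Gigabit Ethernet", 3_092_269, 4_917_199),
    ("Omnipath", 166_863, 248_630),
    ("Custom Interconnect", 331_560, 496_015),
    ("Proprietary Network", 533_405, 645_722),
]

# HPL efficiency: fraction of theoretical peak actually reached on the benchmark
efficiency = {name: rmax / rpeak for name, rmax, rpeak in interconnects}

for name, value in sorted(efficiency.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:20s} {100 * value:5.1f} % of peak")

best = max(efficiency, key=efficiency.get)
```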

Processor Technologies:

| Rank | Processor Generation | Count | System Share (%) | Rmax (TFlops) | Rpeak (TFlops) | Cores |

|---|---|---|---|---|---|---|

| 1 | Intel Cascade lake | 137 | 27.4 | 477,852 | 932,186 | 11,253,276 |

| 2 | Intel Skylake | 95 | 19 | 344,621 | 655,060 | 7,839,400 |

| 3 | AMD Zen-2 (Rome) | 69 | 13.8 | 719,594 | 1,006,710 | 11,863,896 |

| 4 | AMD Zen-3 (Milan) | 66 | 13.2 | 2,090,713 | 2,957,925 | 20,296,256 |

| 5 | Intel Ice Lake | 36 | 7.2 | 405,639 | 591,413 | 4,899,532 |

| 6 | Intel Sapphire Rapids | 25 | 5 | 1,663,979 | 2,752,425 | 10,358,728 |

| 7 | Intel Broadwell | 18 | 3.6 | 69,439 | 86,885 | 2,048,008 |

| 8 | Intel Haswell | 11 | 2.2 | 55,575 | 75,864 | 1,653,660 |

| 9 | Fujitsu ARM | 8 | 1.6 | 530,839 | 641,021 | 9,105,408 |

| 10 | Intel IvyBridge | 7 | 1.4 | 82,953 | 132,610 | 5,841,172 |

| 11 | Intel Xeon Phi | 7 | 1.4 | 80,727 | 153,898 | 3,502,580 |

| 12 | Power | 7 | 1.4 | 311,567 | 417,833 | 5,081,600 |

| 13 | NEC Vector Engine | 5 | 1 | 47,143 | 60,692 | 208,064 |

| 14 | AMD Zen-4 (Genoa) | 5 | 1 | 48,755 | 55,442 | 1,167,456 |

| 15 | ShenWei | 1 | 0.2 | 93,015 | 125,436 | 10,649,600 |

| 16 | Intel Nehalem | 1 | 0.2 | 2,566 | 4,701 | 186,368 |

| 17 | X86_64 | 1 | 0.2 | 4,325 | 6,134 | 163,840 |

Green500

- The data collection and curation of the Green500 project has been integrated with the TOP500 project. This allows submissions of all data through a single webpage at http://top500.org/submit

- The system to claim the No. 1 spot on the GREEN500 is Henri at the Flatiron Institute in the US, with 8,288 total cores and an HPL benchmark of 2.88 PFlop/s. Henri is a Lenovo ThinkSystem SR670 with Intel Xeon Platinum and Nvidia H100.

- In second place is the Frontier Test & Development System (TDS) at ORNL in the US. With 120,832 total cores and an HPL benchmark of 19.2 PFlop/s, the Frontier TDS machine is basically just one rack identical to the actual Frontier system.

- The No. 3 spot was taken by the Adastra system, an HPE Cray EX235a system with AMD EPYC and AMD Instinct MI250X.

HPCG Results

- The TOP500 list now includes the High-Performance Conjugate Gradient (HPCG) Benchmark results.

- Supercomputer Fugaku remains the leader on the HPCG benchmark with 16 HPCG-Pflop/s.

- The DOE system Frontier at ORNL claims the second position with 14.05 HPCG-Pflop/s.

- The third position was captured by the upgraded LUMI system with 4.59 HPCG-Pflop/s.
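Putting the HPCG numbers next to the HPL scores quoted earlier shows how small a fraction of HPL performance these systems reach on HPCG. The sketch below is a simple ratio using figures from this post; the variable names are illustrative only.

```python
# (system, HPCG in Pflop/s, HPL in Pflop/s), both taken from the text of this post
results = [
    ("Fugaku", 16.0, 442.0),
    ("Frontier", 14.05, 1194.0),
    ("LUMI", 4.59, 380.0),
]

# HPCG result expressed as a percentage of the HPL result
hpcg_fraction = {name: 100.0 * hpcg / hpl for name, hpcg, hpl in results}

for name, percent in hpcg_fraction.items():
    print(f"{name:9s} HPCG is {percent:.2f} % of HPL")
```

All three land in the low single digits, with Fugaku reaching the largest fraction of the three.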

HPL-MxP Results

On the HPL-MxP (formerly HPL-AI) benchmark, which measures performance for mixed-precision calculation, Frontier already demonstrated 9.95 Exaflop/s!

The HPL-MxP benchmark seeks to highlight the use of mixed-precision computations. Traditional HPC uses 64-bit floating point computations. Today we see hardware with various levels of floating point precision: 32-bit, 16-bit, and even 8-bit. The HPL-MxP benchmark demonstrates that much higher performance is possible by using mixed precision during the computation (see the Top 5 from the HPL-MxP benchmark), and that, with suitable mathematical techniques, the mixed-precision approach can reach the same accuracy as straight 64-bit precision.
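The "mathematical techniques" mentioned here are typically some form of iterative refinement: solve the system cheaply in low precision, then correct the answer using residuals computed in 64-bit. The sketch below is a toy illustration of that idea in pure Python, not the benchmark's actual algorithm. It emulates 32-bit arithmetic by rounding through `struct`, uses a made-up 3x3 system, and redoes the elimination on every pass for simplicity, whereas a real implementation would factor the matrix once and reuse the factors.

```python
import struct


def to_f32(x: float) -> float:
    """Round a 64-bit Python float to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def solve_low_precision(a, b):
    """Gaussian elimination with partial pivoting, rounding each step to float32."""
    n = len(b)
    m = [[to_f32(v) for v in row] + [to_f32(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = to_f32(m[r][col] / m[col][col])
            for c in range(col, n + 1):
                m[r][c] = to_f32(m[r][c] - to_f32(factor * m[col][c]))
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = m[r][n]
        for c in range(r + 1, n):
            s = to_f32(s - to_f32(m[r][c] * x[c]))
        x[r] = to_f32(s / m[r][r])
    return x


def residual(a, x, b):
    """r = b - A x, computed in full 64-bit precision."""
    return [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(a, b)]


def refine(a, b, iterations=5):
    """Low-precision solve followed by 64-bit residual corrections."""
    x = solve_low_precision(a, b)
    for _ in range(iterations):
        correction = solve_low_precision(a, residual(a, x, b))
        x = [xi + di for xi, di in zip(x, correction)]
    return x


# Hypothetical, well-conditioned test system with a known solution.
a = [[4.0, 1.0, 2.0], [1.0, 5.0, 1.0], [2.0, 1.0, 6.0]]
x_true = [1.0 / 3.0, -2.0 / 7.0, 0.1]
b = [sum(aij * xj for aij, xj in zip(row, x_true)) for row in a]

err_low = max(abs(u - v) for u, v in zip(solve_low_precision(a, b), x_true))
err_refined = max(abs(u - v) for u, v in zip(refine(a, b), x_true))
print(f"float32-only error: {err_low:.2e}")
print(f"refined error:      {err_refined:.2e}")
```

The bulk of the arithmetic stays in the cheap low-precision solver, while the few 64-bit residual evaluations are what recover the accuracy.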

| Rank (HPL-MxP) | Site | Computer | Cores | HPL-MxP (Eflop/s) | TOP500 Rank | HPL Rmax (Eflop/s) | Speedup of HPL-MxP over HPL |
|---|---|---|---|---|---|---|---|
| 1 | DOE/SC/ORNL, USA | Frontier, HPE Cray EX235a | 8,699,904 | 9.950 | 1 | 1.1940 | 8.3 |
| 2 | EuroHPC/CSC, Finland | LUMI, HPE Cray EX235a | 2,752,704 | 2.350 | 5 | 0.3797 | 6.18 |
| 3 | RIKEN, Japan | Fugaku, Fujitsu A64FX | 7,630,848 | 2.000 | 4 | 0.4420 | 4.5 |
| 4 | EuroHPC/CINECA, Italy | Leonardo, Bull Sequana XH2000 | 1,824,768 | 1.842 | 6 | 0.2387 | 7.7 |
| 5 | DOE/SC/ORNL, USA | Summit, IBM AC922 POWER9 | 2,414,592 | 1.411 | 7 | 0.1486 | 9.5 |
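The speedup column is just the HPL-MxP score divided by the HPL Rmax. A quick sketch to recompute it from the two columns in the table (the small differences from the published values presumably come from rounding in the inputs):

```python
# (system, HPL-MxP in Eflop/s, HPL Rmax in Eflop/s, published speedup)
rows = [
    ("Frontier", 9.950, 1.1940, 8.3),
    ("LUMI", 2.350, 0.3797, 6.18),
    ("Fugaku", 2.000, 0.4420, 4.5),
    ("Leonardo", 1.842, 0.2387, 7.7),
    ("Summit", 1.411, 0.1486, 9.5),
]

speedups = {}
for name, mxp, hpl, published in rows:
    speedups[name] = mxp / hpl  # mixed-precision score relative to 64-bit HPL
    print(f"{name:9s} computed {speedups[name]:.2f}x   published {published}x")
```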

About the TOP500 List

The first version of what became today’s TOP500 list started as an exercise for a small conference in Germany in June 1993. Out of curiosity, the authors decided to revisit the list in November 1993 to see how things had changed. About that time they realized they might be onto something and decided to continue compiling the list, which is now a much-anticipated, much-watched and much-debated twice-yearly event.

https://www.top500.org/lists/top500/2023/11/highs/